Stability AI’s Stable Diffusion 3 is the latest version of its popular and powerful AI image-generation tool. It can deliver photorealistic results thanks to what Stability AI describes as the “most advanced text-to-image open model yet.” For those following the rise of AI image generators, one long-standing problem that Stable Diffusion 3 solves is recreating repeating patterns and, yes, human hands.

It’s impressive, and the model runs on hardware ranging from an Apple M2 Ultra to a GeForce RTX 4090-powered rig. Naturally, with its 24GB of GDDR6X memory and Ada Lovelace architecture, the latter is far faster than any other “at-home” option. With TensorRT acceleration and optimization, the GPU can even generate images in real time.

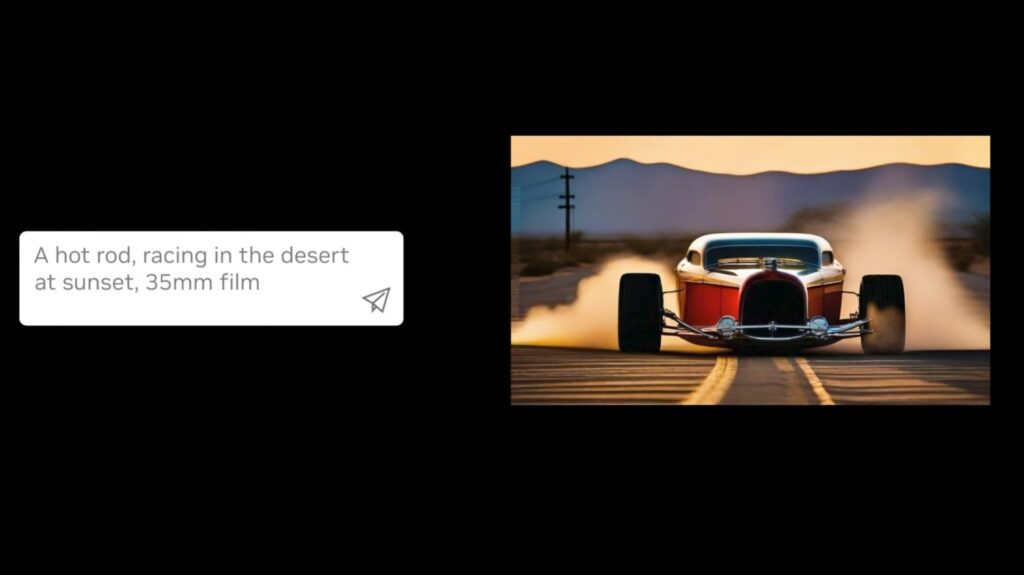

You can see this in action in a video that NVIDIA presented at SIGGRAPH 2024. It showcases SDXL Turbo generating an image of “a hot rod, racing in the desert at sunset” in real time, updating the image with new details, such as a canyon in the background, as the prompt is being typed. Impressive!

NVIDIA notes that this is possible due to TensorRT optimizations, which provide a 60% speedup in Stable Diffusion 3.0 performance. An NVIDIA NIM microservice for Stable Diffusion 3 with this optimized performance is available for preview on ai.nvidia.com so that you can check it out for yourself.

The performance bump also applies to video generation (around 40%), though a video still takes a few seconds to generate. Still, it’s impressive that we’re already at the stage where complex AI-generated images can be whipped up in real time on a local machine. Sure, a GeForce RTX 4090 isn’t exactly a mainstream GPU, but it’s something you can buy in a store, not a giant collection of hardware sitting in a data center somewhere.