NVIDIA had quite a lot to announce at Computex 2024, but one of the biggest teases was its future-gen AI GPU architectures, including something we’ve been hearing rumblings about for a while now: Rubin.

NVIDIA’s beefed-up Blackwell Ultra AI GPU (source: Wccftech)


NVIDIA CEO Jensen Huang revealed the next-gen Rubin GPU architecture, named after American astronomer Vera Rubin, who made significant contributions to the understanding of dark matter in the universe and did pioneering work on galaxy rotation rates.

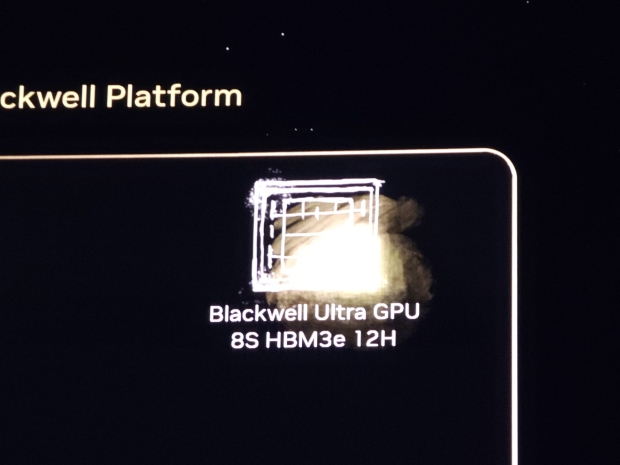

The thing is, NVIDIA hasn’t even got its new Blackwell AI GPUs out into the wild yet, but we’re already seeing Rubin announced. NVIDIA’s new Blackwell B100 and B200 AI GPUs will arrive later this year, but the company also has supercharged versions that will feature 12-Hi HBM3E memory stacks across 8 sites, versus the 8-Hi HBM3E memory stacks across 8 sites on existing products. We should see these beefed-up Blackwell AI GPUs arrive in 2025.

NVIDIA’s next-gen Rubin R100 AI GPU (source: Wccftech)

Now… onto the next-gen Rubin GPU architecture. NVIDIA’s next-gen Rubin R100 AI GPU will be a member of the upcoming R-series family and should enter mass production in Q4 2025, while systems built around the new Rubin AI GPUs, like the DGX and HGX AI systems, are expected to enter mass production in the first half of 2026.

NVIDIA says that its next-gen Rubin AI GPUs and their respective platforms will be available in 2026, while a Rubin Ultra AI GPU offering will be unleashed in 2027. NVIDIA also confirmed that its next-gen Rubin AI GPUs will use next-gen HBM4 memory.

We’re expecting the next-gen NVIDIA Rubin R100 AI GPUs to use a 4x reticle design (compared to Blackwell with its 3.3x reticle design), built with TSMC’s bleeding-edge CoWoS-L packaging technology and made on the new N3 process node. TSMC recently talked about up to 5.5x reticle size chips arriving in 2026, featuring a 100 x 100mm substrate that would handle 12 HBM sites, versus 8 HBM sites on current-gen 80 x 80mm packages.
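To put those package numbers side by side, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above, plus one assumption that is not from NVIDIA or TSMC's announcements here: that a single reticle field is roughly 26 x 33mm (about 858mm²), the commonly cited lithography limit. The function and variable names are made up for illustration.

```python
# Figures quoted in the article.
RETICLE_MULTIPLES = {
    "Blackwell": 3.3,
    "Rubin R100 (expected)": 4.0,
    "TSMC 2026 target": 5.5,
}
# (substrate width in mm, substrate height in mm, number of HBM sites)
PACKAGES = {
    "current-gen": (80, 80, 8),
    "2026": (100, 100, 12),
}

# Assumption, not from the article: one reticle field is about 26 mm x 33 mm.
ASSUMED_RETICLE_MM2 = 26 * 33


def interposer_area_mm2(multiple, reticle_mm2=ASSUMED_RETICLE_MM2):
    """Approximate silicon area for an 'Nx reticle' design."""
    return multiple * reticle_mm2


def compare_packages(old, new):
    """Return (substrate area ratio, HBM site ratio) of new vs old."""
    old_w, old_h, old_sites = old
    new_w, new_h, new_sites = new
    area_ratio = (new_w * new_h) / (old_w * old_h)
    site_ratio = new_sites / old_sites
    return area_ratio, site_ratio


if __name__ == "__main__":
    for name, multiple in RETICLE_MULTIPLES.items():
        area = interposer_area_mm2(multiple)
        print(f"{name}: {multiple}x reticle, roughly {area:.0f} mm^2")

    area_ratio, site_ratio = compare_packages(
        PACKAGES["current-gen"], PACKAGES["2026"]
    )
    print(f"Substrate area grows {area_ratio:.2f}x, HBM sites grow {site_ratio:.2f}x")
```

Under these figures the substrate area grows a little faster than the HBM site count, so the bigger package leaves slightly more room per memory site, not less. The absolute mm² values depend entirely on the assumed reticle size and should be read as ballpark numbers only.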

TSMC will be shifting to a new SoIC design that will allow chips larger than 8x reticle size on a bigger 120 x 120mm package configuration, but as Wccftech points out, these are still in the planning stage, so we can probably expect somewhere around the 4x reticle size for Rubin R100 AI GPUs.

NVIDIA’s announcement that it will use next-gen HBM4 memory on its next-gen Rubin R100 AI GPU is a big deal, as the company is already running the very fastest HBM3E memory on the market right now for its Blackwell B100 and B200 AI GPUs.

NVIDIA also teased the GR200 Superchip module, which will feature 2 x R100 AI GPUs and an upgraded Grace CPU based on TSMC’s new 3nm process. The current-gen Grace CPUs are made on TSMC’s current 5nm process node, with 72 cores each for a total of 144 cores on the Grace Superchip solution. The next-generation Arm-based CPU solution has also been confirmed, and it’s known as Vera: Rubin for the AI GPU, and Vera for the CPU. Vera Rubin gets some mad respect from NVIDIA here.