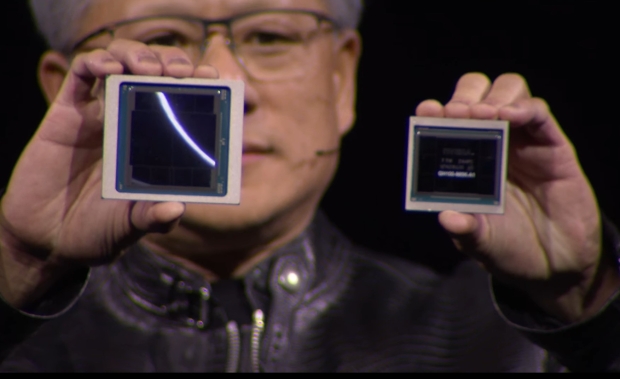

NVIDIA CEO Jensen Huang has confirmed that engineering samples of its next-gen Blackwell AI GPUs will be sent out “all over the world”.

The news comes from Huang himself during a chat at SIGGRAPH 2024 this week, where he said: “This week, we are sending out engineering samples of Blackwell all over the world”.

SIGGRAPH is more tailored towards the “software side of things” so NVIDIA didn’t go into the nitty-gritty of its AI hardware, but it did tease that the engineering samples of Blackwell will be headed out this week. That’s a very good sign, as it shows that Blackwell AI GPUs are very, very close to being in customers’ hands.

Google, Meta, Microsoft, Amazon, and more companies have been tripping over themselves trying to buy as many Blackwell AI GPUs and GB200 AI servers as possible. NVIDIA has been scrambling to get TSMC to crank up its semiconductor fab lines to their limits, and SK hynix to provide as much HBM3E memory as possible, so it can make more AI GPUs than thought possible.

NVIDIA recently raised orders with TSMC by 25% for its next-gen Blackwell AI GPUs, and there are rumors that NVIDIA requested a dedicated packaging manufacturing line for its GPUs at TSMC, to which TSMC said no.

We’re expecting the next-gen GB200 AI server cabinets to ship in “small quantities” in Q4 2024 at the earliest, with the big waves of GB200 AI server cabinets shipping throughout 2025. Each GB200 Superchip inside the AI server costs up to $70,000, while a full GB200 NVL72 AI server cabinet will cost upwards of $3 million.

In 2025 alone, NVIDIA is expected to make $210 billion from its Blackwell GB200 AI servers, which is a damn big pile of revenue from just one product line.

This is without the B100 and B200 AI GPU sales, of which there are going to be many: lots of B100 and B200 AI GPUs are going to get pumped out, purchased, and put to work powering future systems for AI compute tasks and more.