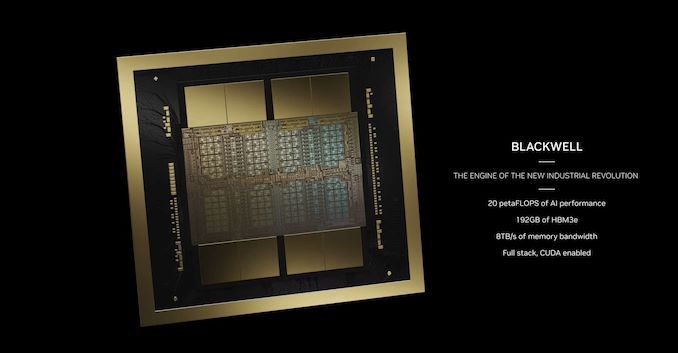

Already solidly in the driver’s seat of the generative AI accelerator market, NVIDIA has long made it clear that the company isn’t about to slow down and check out the view. Instead, NVIDIA intends to continue iterating along its multi-generational product roadmap for GPUs and accelerators, to leverage its early advantage and stay ahead of its ever-growing coterie of competitors in the accelerator market. So while NVIDIA’s ridiculously popular H100/H200/GH200 series of accelerators is the hottest ticket in Silicon Valley, it’s already time to talk about the next generation accelerator architecture to feed NVIDIA’s AI ambitions: Blackwell.

Amidst the backdrop of the first in-person GTC in 5 years – NVIDIA hasn’t held one of these since Volta was in vogue – NVIDIA CEO Jensen Huang is taking the stage to announce a slate of new enterprise products and technologies that the company has been hard at work on over the last few years. But none of these announcements are as eye-catching as NVIDIA’s server chip announcements, as it’s the Hopper architecture GH100 chip and NVIDIA’s deep software stack running on top of it that have blown the lid off of the AI accelerator industry, and have made NVIDIA the third most valuable company in the world.

But the one catch to making a groundbreaking product in the tech industry is that you need to do it again. So all eyes are on Blackwell, the next generation NVIDIA accelerator architecture that is set to launch later in 2024.

Named after Dr. David Harold Blackwell, an American statistics and mathematics pioneer, who, among other things, wrote the first Bayesian statistics textbook, the Blackwell architecture is once again NVIDIA doubling down on many of the company’s trademark architectural designs, looking to find ways to work smarter and work harder in order to boost the performance of their all-important datacenter/HPC accelerators. NVIDIA has a very good thing going with Hopper (and Ampere before it), and at a high level, Blackwell aims to bring more of the same, but with more features, more flexibility, and more transistors.

As I wrote back during the Hopper launch, “NVIDIA has developed a very solid playbook for how to tackle the server GPU industry. On the hardware side of matters that essentially boils down to correctly identifying current and future trends as well as customer needs in high performance accelerators, investing in the hardware needed to handle those workloads at great speeds, and then optimizing the heck out of all of it.” And that mentality has not changed for Blackwell. NVIDIA has improved every aspect of their chip design from performance to memory bandwidth, and each and every element is targeted at improving performance in a specific workload/scenario or removing a bottleneck to scalability. And, once again, NVIDIA is continuing to find more ways to do less work altogether.

Ahead of today’s keynote (which by the time you’re reading this, should still be going on), NVIDIA offered the press a limited pre-briefing on the Blackwell architecture and the first chip to implement it. I say “limited” because there are a number of key specifications the company is not revealing ahead of the keynote, and even the name of the GPU itself is unclear; NVIDIA just calls it the “Blackwell GPU”. But here is a rundown of what we know so far about the heart of the next generation of NVIDIA accelerators.

NVIDIA Flagship Accelerator Specification Comparison

| | B200 | H100 | A100 (80GB) |
|---|---|---|---|
| FP32 CUDA Cores | A Whole Lot | 16896 | 6912 |
| Tensor Cores | As Many As Possible | 528 | 432 |
| Boost Clock | To The Moon | 1.98GHz | 1.41GHz |
| Memory Clock | 8Gbps HBM3E | 5.23Gbps HBM3 | 3.2Gbps HBM2e |
| Memory Bus Width | 2x 4096-bit | 5120-bit | 5120-bit |
| Memory Bandwidth | 8TB/sec | 3.35TB/sec | 2TB/sec |
| VRAM | 192GB (2x 96GB) | 80GB | 80GB |
| FP32 Vector | ? TFLOPS | 67 TFLOPS | 19.5 TFLOPS |
| FP64 Vector | ? TFLOPS | 34 TFLOPS | 9.7 TFLOPS (1/2 FP32 rate) |
| FP4 Tensor | 9 PFLOPS | N/A | N/A |
| INT8/FP8 Tensor | 4500 T(FL)OPS | 1980 TOPS | 624 TOPS |
| FP16 Tensor | 2250 TFLOPS | 990 TFLOPS | 312 TFLOPS |
| TF32 Tensor | 1100 TFLOPS | 495 TFLOPS | 156 TFLOPS |
| FP64 Tensor | 40 TFLOPS | 67 TFLOPS | 19.5 TFLOPS |
| Interconnect | NVLink 5, 18 Links (1800GB/sec) | NVLink 4, 18 Links (900GB/sec) | NVLink 3, 12 Links (600GB/sec) |
| GPU | “Blackwell GPU” | GH100 (814mm²) | GA100 (826mm²) |
| Transistor Count | 208B (2x104B) | 80B | 54.2B |
| TDP | 1000W | 700W | 400W |
| Manufacturing Process | TSMC 4NP | TSMC 4N | TSMC 7N |
| Interface | SXM | SXM5 | SXM4 |
| Architecture | Blackwell | Hopper | Ampere |

Tensor throughput figures for dense/non-sparse operations, unless otherwise noted

The first thing to note is that the Blackwell GPU is going to be big. Literally. The B200 modules that it will go into will feature two GPU dies on a single package. That’s right, NVIDIA has finally gone chiplet with their flagship accelerator. While they are not disclosing the size of the individual dies, we’re told that they are “reticle-sized” dies, which should put them somewhere over 800mm² each. The GH100 die itself was already approaching TSMC’s 4nm reticle limits, so there’s very little room for NVIDIA to grow here – at least not while staying within a single die.

Curiously, despite these die space constraints, NVIDIA is not using a TSMC 3nm-class node for Blackwell. Technically they are using a new node – TSMC 4NP – but this is just a higher performing version of the 4N node used for the GH100 GPU. So for the first time in ages, NVIDIA is not getting to tap the performance and density advantages of a major new node. This means virtually all of Blackwell’s efficiency gains have to come from architectural efficiency, while a mix of that efficiency and the sheer size of scaling-out will deliver Blackwell’s overall performance gains.

Despite sticking to a 4nm-class node, NVIDIA has been able to squeeze more transistors into a single die. The transistor count for the complete accelerator stands at 208B, or 104B transistors per die. GH100 was 80B transistors, so each Blackwell die has about 30% more transistors overall, a modest gain by historical standards. That, in turn, is why we’re seeing NVIDIA employ more dies for their complete GPU.
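To make the scaling argument concrete, here is a minimal sketch of the arithmetic using only the transistor counts quoted above. The variable names are purely illustrative.

```python
# Transistor counts as quoted in the article; names are illustrative only.
BLACKWELL_TOTAL_TRANSISTORS = 208e9  # complete two-die accelerator
BLACKWELL_DIES = 2
GH100_TRANSISTORS = 80e9             # single Hopper die

per_die = BLACKWELL_TOTAL_TRANSISTORS / BLACKWELL_DIES
per_die_gain = per_die / GH100_TRANSISTORS - 1
package_ratio = BLACKWELL_TOTAL_TRANSISTORS / GH100_TRANSISTORS

print(f"Per die: {per_die / 1e9:.0f}B transistors")
print(f"Per-die gain over GH100: {per_die_gain:.0%}")
print(f"Whole package vs. GH100: {package_ratio:.1f}x")
```

Put another way, the per-die gain is modest, and it is the second die that takes the complete package to roughly 2.6x GH100's transistor budget.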

For their first multi-die chip, NVIDIA is intent on skipping the awkward “two accelerators on one chip” phase, and moving directly on to having the entire accelerator behave as a single chip. According to NVIDIA, the two dies operate as “one unified CUDA GPU”, offering full performance with no compromises. Key to that is the high bandwidth I/O link between the dies, which NVIDIA terms NV-High Bandwidth Interface (NV-HBI), and offers 10TB/second of bandwidth. Presumably that’s in aggregate, meaning the dies can send 5TB/second in each direction simultaneously.

What hasn’t been detailed thus far is the construction of this link – whether NVIDIA is relying on Chip-on-Wafer-on-Substrate (CoWoS) throughout, using a base die strategy (AMD MI300), or if they’re relying on a separate local interposer just for linking up the two dies (à la Apple’s UltraFusion). Either way, this is significantly more bandwidth than any other two-chip bridge solution we’ve seen thus far, which means a whole lot of pins are in play.

On B200, each die is being paired with 4 stacks of HBM3E memory, for a total of 8 stacks altogether, forming an effective memory bus width of 8192 bits. One of the constraining factors in all AI accelerators has been memory capacity (not to undersell the need for bandwidth as well), so being able to place down more stacks is huge in improving the accelerator’s local memory capacity. Altogether, B200 offers 192GB of HBM3E, or 24GB/stack, which is identical to the 24GB/stack capacity of H200 (and 50% more memory than the original 16GB/stack H100).

According to NVIDIA, the chip has an aggregate HBM memory bandwidth of 8TB/second, which works out to 1TB/second per stack – or a data rate of 8Gbps/pin. As we’ve noted in our previous HBM3E coverage, the memory is ultimately designed to go to 9.2Gbps/pin or better, but we often see NVIDIA play things a bit conservatively on clockspeeds for their server accelerators. Either way, this is almost 2.4x the memory bandwidth of the H100 (or 66% more than the H200), so NVIDIA is seeing a significant increase in bandwidth.
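The bandwidth and capacity figures can be cross-checked with a few lines of arithmetic. This is a rough sketch rather than anything from NVIDIA: the helper function and constant names are hypothetical, the per-stack width is simply the quoted 8192-bit bus divided across 8 stacks, and the H200 bandwidth is NVIDIA's published figure rather than a number stated in this article.

```python
def hbm_bandwidth_gb_per_s(stacks: int, pins_per_stack: int, gbps_per_pin: float) -> float:
    """Aggregate bandwidth in GB/s: total pins x per-pin rate (Gbit/s) / 8 bits per byte."""
    return stacks * pins_per_stack * gbps_per_pin / 8

STACKS = 8
PINS_PER_STACK = 8192 // STACKS  # 1024-bit interface per stack
GBPS_PER_PIN = 8.0               # data rate quoted in the article
GB_PER_STACK = 24

b200_gb_per_s = hbm_bandwidth_gb_per_s(STACKS, PINS_PER_STACK, GBPS_PER_PIN)
b200_capacity_gb = STACKS * GB_PER_STACK

# Comparison points in TB/s. H100's figure is from the spec table; the H200
# figure is an assumption taken from NVIDIA's published specs.
B200_TB_PER_S = 8.0
H100_TB_PER_S = 3.35
H200_TB_PER_S = 4.8

print(f"Aggregate: {b200_gb_per_s:.0f} GB/s ({b200_gb_per_s / STACKS:.0f} GB/s per stack)")
print(f"Capacity: {b200_capacity_gb} GB")
print(f"vs. H100: {B200_TB_PER_S / H100_TB_PER_S:.2f}x")
print(f"vs. H200: {B200_TB_PER_S / H200_TB_PER_S:.2f}x")
```

The raw product comes out slightly above the rounded 8TB/second headline figure, which is the usual gap between pin-level math and a marketing-friendly number.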

Finally, for the moment we don’t have any information on the TDP of a single B200 accelerator. Undoubtedly, it’s going to be high – you can’t more than double your transistors in a post-Dennard world and not pay some kind of power penalty. NVIDIA will be selling both air cooled DGX systems and liquid-cooled NVL72 racks, so B200 is not beyond air cooling, but pending confirmation from NVIDIA, I am not expecting a small number.

Overall, compared to H100 at the cluster level, NVIDIA is targeting a 4x increase in training performance, and an even more massive 30x increase in inference performance, all the while doing so with 25x greater energy efficiency. We’ll cover some of the technologies behind this as we go, and more about how NVIDIA intends to accomplish this will undoubtedly be revealed as part of the keynote.

But the most interesting takeaway from those goals is the inference performance increase. NVIDIA currently rules the roost on training, but inference is a much wider and more competitive market. However, once these large models are trained, even more compute resources will be needed to execute them, and NVIDIA doesn’t want to be left out there. But that means finding a way to take (and keep) a convincing lead in a far more cutthroat market, so NVIDIA has their work cut out for them.

Second-Generation Transformer Engine: Even Lower Precisions

One of NVIDIA’s big wins with Hopper, architecturally speaking, was their decision to optimize their architecture for transformer-type models with the inclusion of specialized hardware – which NVIDIA calls their Transformer Engine. By taking advantage of the fact that transformers don’t need to process all of their weights and parameters at a high precision (FP16), NVIDIA added support for mixing those operations with lower precision (FP8) operations to cut down on memory needs and improve throughput. This is a decision that paid off very handsomely when GPT-3/ChatGPT took off later in 2022, and the rest is history.

For their second generation transformer engine, then, NVIDIA is going to limbo even lower. Blackwell will be able to handle number formats down to FP4 precision – yes, a floating point number format with just 16 states – with an eye towards using the very-low precision format for inference. Meanwhile, NVIDIA is eyeing doing more training at FP8, which again keeps compute throughput high and memory consumption low.
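To get a feel for just how coarse a 16-state floating point format is, the sketch below enumerates every 4-bit pattern. NVIDIA has not disclosed the bit layout of its FP4 format in this briefing, so this assumes the E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit, exponent bias of 1, no infinities or NaNs) defined in the OCP Microscaling formats specification. Treat it as an illustration of the idea, not a description of Blackwell's hardware; `decode_e2m1` is a hypothetical helper.

```python
def decode_e2m1(bits: int) -> float:
    """Decode a 4-bit pattern as E2M1 (assumed layout, not confirmed for Blackwell)."""
    sign = -1.0 if (bits >> 3) & 1 else 1.0
    exponent = (bits >> 1) & 0b11
    mantissa = bits & 1
    if exponent == 0:
        # Subnormal: no implicit leading 1, so the only values are 0 and 0.5
        return sign * mantissa * 0.5
    # Normal: implicit leading 1, exponent bias of 1
    return sign * (1 + mantissa * 0.5) * 2.0 ** (exponent - 1)

values = [decode_e2m1(b) for b in range(16)]
for b, v in enumerate(values):
    print(f"{b:04b} -> {v}")
```

Under that assumption, the entire representable range runs from -6 to 6 in uneven steps, with positive and negative zero using up two of the 16 patterns. In practice, schemes like this lean on per-block scaling factors to recover dynamic range, though whether and how Blackwell does so has not been detailed here.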

Transformers have shown an interesting ability to handle lower precision formats without losing too much in the way of accuracy. But FP4 is quite low, to say the least. So absent further information, I am extremely curious how NVIDIA and its users intend to hit their accuracy needs with such a low data precision, as FP4 being useful for inference would seem to be what will make or break B200 as an inference platform.

In any case, NVIDIA is expecting a single B200 accelerator to be able to offer up to 10 PetaFLOPS of FP8 performance – which assuming the use of sparsity, is about 2.5x H100’s rate – and an even more absurd 20 PFLOPS of FP4 performance for inference. H100 doesn’t even benefit from FP4, so compared to its minimum FP8 data size, B200 should offer a 5x increase in raw inference throughput when FP4 can be used.
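As a sanity check on those ratios, here is a small sketch built from the H100 dense FP8 rate in the spec table. It assumes, per NVIDIA's usual convention for its sparse figures, that structured sparsity doubles the peak rate, and that the 10 and 20 PFLOPS Blackwell figures are sparse numbers; the constant names are illustrative.

```python
# All rates in TFLOPS. The H100 dense FP8 rate is taken from the spec table.
H100_FP8_DENSE = 1980
SPARSITY_SPEEDUP = 2  # assumption: sparsity doubles the peak rate
H100_FP8_SPARSE = H100_FP8_DENSE * SPARSITY_SPEEDUP

B200_FP8 = 10_000  # 10 PFLOPS, as quoted (assumed to be a sparse figure)
B200_FP4 = 20_000  # 20 PFLOPS, as quoted (assumed to be a sparse figure)

fp8_ratio = B200_FP8 / H100_FP8_SPARSE
fp4_ratio = B200_FP4 / H100_FP8_SPARSE  # H100's lowest precision is FP8

print(f"FP8 vs. H100 FP8: {fp8_ratio:.2f}x")
print(f"FP4 vs. H100 FP8: {fp4_ratio:.2f}x")
```

Both results land within rounding distance of the 2.5x and 5x figures, which suggests the comparison is indeed sparse-to-sparse.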

And assuming NVIDIA’s compute performance ratios remain unchanged from H100, with FP16 performance being half of FP8, and scaling down from there, B200 stands to be a very potent chip at higher precisions as well. Though at least for AI uses, clearly the goal is to try to get away with the lowest precision possible.

At the other end of the spectrum, what also remains undisclosed ahead of the keynote address is FP64 tensor performance. NVIDIA has offered FP64 tensor capabilities since their Ampere architecture, albeit at a much reduced rate compared to lower precisions. This is of little use for the vast majority of AI workloads, but is beneficial for HPC workloads. So I am curious to see what NVIDIA has planned here – if B200 will have much in the way of HPC chops, or if NVIDIA intends to go all-in on low precision AI.

This is breaking news. Additional details to follow