Microsoft is finally ready to enter the custom AI hardware race, a chip market in which NVIDIA has at least a 75 percent market share. At this year’s Hot Chips conference, the company unveiled its first AI accelerator, Maia 100, built on TSMC’s 5nm process node.

Designed to “optimize performance and reduce costs,” Maia 100’s architecture includes custom server boards, racks, and software for running AI services like Microsoft’s Azure OpenAI Services.

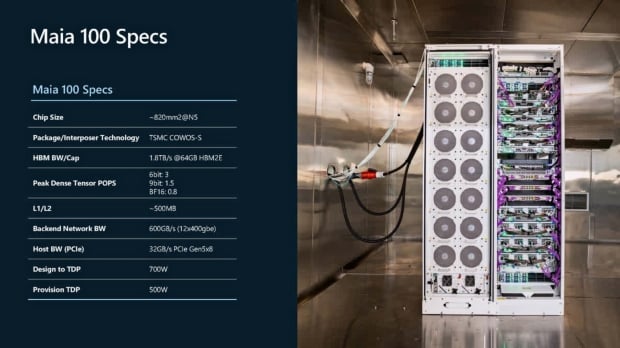

“The Maia 100 accelerator is purpose-built for a wide range of cloud-based AI workloads,” Microsoft’s technical blog on Maia 100 details. “The chip measures out at ~820mm² and utilizes TSMC’s N5 process with COWOS-S interposer technology. Equipped with large on-die SRAM, Maia 100’s reticle-size SoC die, combined with four HBM2E die, provide a total of 1.8 terabytes per second of bandwidth and 64 gigabytes of capacity to accommodate AI-scale data handling requirements.”
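For a rough sense of scale, the aggregate memory figures in that quote can be broken down per HBM2E stack. The short Python sketch below is back-of-the-envelope arithmetic only: it assumes capacity and bandwidth are split evenly across the four stacks and uses decimal units (1 TB = 1,000 GB), neither of which Microsoft's post spells out. The variable and function names are illustrative.

```python
# Aggregate figures quoted from Microsoft's blog post.
HBM_STACKS = 4
TOTAL_CAPACITY_GB = 64
TOTAL_BANDWIDTH_TBPS = 1.8


def per_stack(total, stacks=HBM_STACKS):
    """Split an aggregate figure evenly across stacks (an assumption)."""
    return total / stacks


# Assumption: decimal units, so 1 TB/s = 1000 GB/s.
total_bandwidth_gbps = TOTAL_BANDWIDTH_TBPS * 1000

capacity_per_stack_gb = per_stack(TOTAL_CAPACITY_GB)
bandwidth_per_stack_gbps = per_stack(total_bandwidth_gbps)

# Time to read the entire 64 GB once at the quoted peak bandwidth, in ms.
full_sweep_ms = TOTAL_CAPACITY_GB / total_bandwidth_gbps * 1000

print(f"Per stack: {capacity_per_stack_gb:.0f} GB, {bandwidth_per_stack_gbps:.0f} GB/s")
print(f"Full-memory sweep at peak bandwidth: {full_sweep_ms:.1f} ms")
```

Under those assumptions, each stack works out to 16GB and 450GB/s, and reading the full 64GB once at peak bandwidth would take roughly 36 milliseconds. Real workloads rarely sustain peak bandwidth, so treat these as upper bounds.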

The AI accelerator is designed to support up to 700W but is provisioned at 500W, a setup intended to balance performance against power draw for its targeted workloads. Maia 100’s architecture includes a high-speed 16xRx16 tensor unit for training and inference, along with a vector processor supporting a wide range of data types, including FP32 and BF16.

The 64GB of HBM2E memory might look like a step down from the 80GB found in NVIDIA’s H100 chip, not to mention the 192GB of HBM3E coming with B200, but Maia 100 is squarely focused on cost and efficiency for its AI processing capabilities. On the software front, the Maia SDK lets developers port models previously created in PyTorch and Triton, and it offers scheduling and device management tools.

“With its advanced architecture, comprehensive developer tools, and seamless integration with Azure, the Maia 100 is revolutionizing the way Microsoft manages and executes AI workloads,” Microsoft says. “Through the algorithmic co-design of hardware with software, built-in hardware optionality for both model developers and custom kernel authors, and a vertically integrated design to optimize performance and improve power efficiency while reducing costs, Maia 100 offers a new option for running advanced cloud-based AI workloads on Microsoft’s AI infrastructure.”

For a detailed look at Maia 100’s architecture, check out Microsoft’s technical ‘Inside Maia 100’ post.