Micron has announced that its HBM3E memory supply is sold out for 2024, and that most of its 2025 supply has already been allocated.


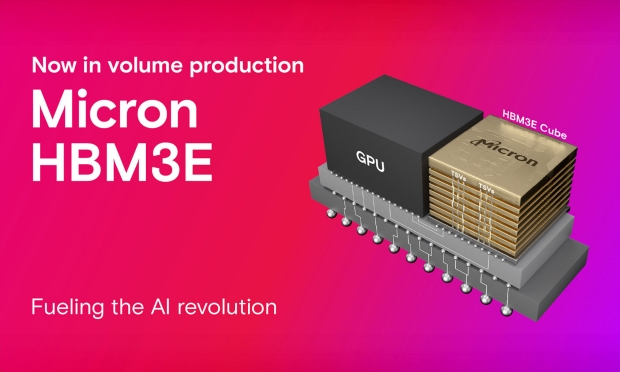

Micron’s latest HBM3E memory is expected to power NVIDIA’s beefed-up H200 AI GPU, as the US company competes against South Korean HBM rivals Samsung and SK hynix. Micron CEO Sanjay Mehrotra shared the new supply details during a recent earnings call.

Mehrotra said: “Our HBM is sold out for calendar 2024, and the overwhelming majority of our 2025 supply has already been allocated. We continue to expect HBM bit share equivalent to our overall DRAM bit share sometime in calendar 2025. We are on track to generate several hundred million dollars of revenue from HBM in fiscal 2024 and expect HBM revenues to be accretive to our DRAM and overall gross margins starting in the fiscal third quarter.”

Mehrotra continued: “The ramp of HBM production will constrain supply growth in non-HBM products. Industrywide, HBM3E consumes approximately three times the wafer supply as DDR5 to produce a given number of bits in the same technology node.”

Micron also said it has begun sampling its new HBM3E 12-Hi stacks, which offer 50% more memory capacity than its 8-Hi stacks and should make AI GPUs even more capable. The new 36GB HBM3E 12-Hi stacks are headed for next-generation AI GPUs, with production expected to ramp in 2025.