How to Train LLMs to “Think” (o1 & DeepSeek-R1) | Towards Data Science

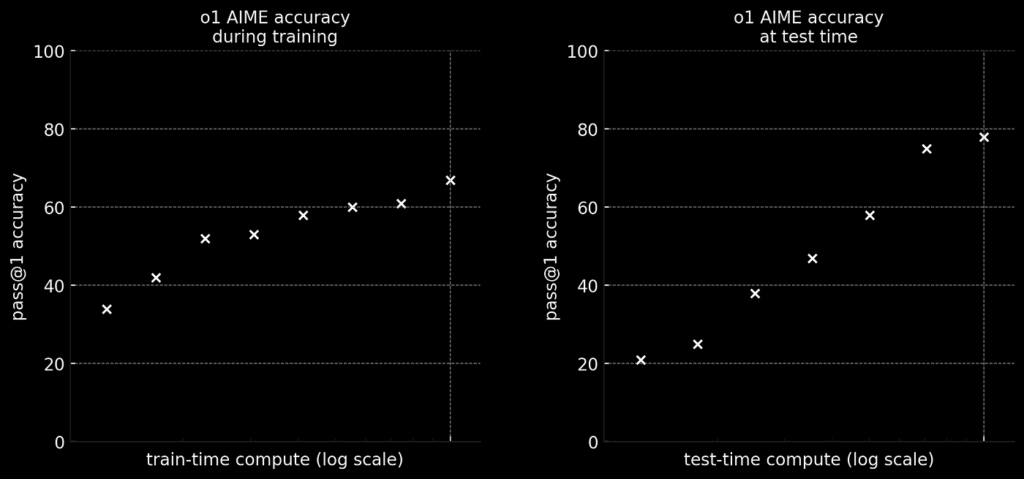

In September 2024, OpenAI released its o1 model, trained with large-scale reinforcement learning to give it “advanced reasoning” capabilities. Unfortunately, the details of how they pulled this off were never shared publicly. Today, however, DeepSeek (an AI research lab) has replicated this reasoning behavior and published the full technical details of their approach. In this article, I will discuss the key ideas behind this innovation and describe how they work under the hood.

OpenAI’s