We’ve covered NVIDIA ACE a few times at TweakTown. It's a toolset of AI-powered technologies that can bring digital humans to life. As seen in the Covert Protocol tech demo, AI generates personalities and dialogue, converts text to speech, creates facial animations, and more. For game developers and gamers, it’s a potential game changer for player interaction and immersion in a digital world.


However, the generative AI tools NVIDIA ACE offers developers go beyond adding AI-generated characters to a game, powered by the Nemotron Mini 4B model that can run locally on GeForce RTX 40 Series GPUs.

Audio2Face-3D is a brilliant tool in its own right: a plugin that uses audio to generate accurate lip-syncing and facial animation for characters.

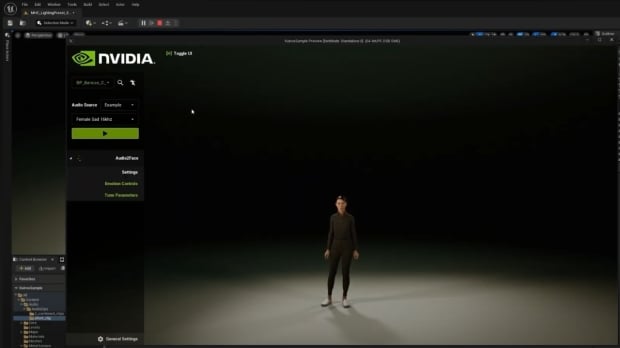

Audio2Face-3D integrates easily into Autodesk Maya and Unreal Engine 5, and NVIDIA has created an Unreal Engine 5 sample project that serves as a guide for developers looking to use ACE to add digital humans to their games and applications.

“As a developer, you can build a database for your intellectual property, generate relevant responses at low latency, and have those responses drive corresponding MetaHuman facial animations seamlessly in Unreal Engine 5,” NVIDIA’s Ike Nnoli writes. “Each of these microservices are optimized to run on Windows PCs with low latency and a minimal memory footprint.”

Accurate facial animation is only a small part of the bigger picture. Retrieval-augmented generation (RAG), for example, allows AI models and characters to be aware of conversational history and context. Because these detailed digital humans, or MetaHuman characters in Unreal Engine 5, require a lot of AI horsepower, they can also run in the cloud.