As robots become more self-sufficient, they have to navigate their surroundings with greater independence and reliability. | Credit: PointOne Nav

As robots become more self-sufficient, they have to navigate their surroundings with greater independence and reliability. Autonomous tractors, agricultural harvesters, and seeding machines must carefully make their way through crop fields while self-driving delivery vehicles must safely traverse the streets to place packages in the correct spot. Across a wide range of applications, autonomous mobile robots (AMRs) require highly accurate sources of positioning to safely and successfully complete the jobs for which they are designed.

Accomplishing such precision requires two sets of location capabilities. The first is the ability to determine the robot's position relative to other objects. This provides critical input for understanding the world around it and, most obviously, for avoiding obstacles, whether stationary or moving. This dynamic maneuvering requires an extensive stack of navigational sensors such as cameras, radar, and lidar, along with the supporting software to process these signals and give the AMR real-time direction.

The second set of capabilities lets the AMR understand its precise physical (or absolute) location in the world, so it can accurately and repeatedly navigate a path that was programmed into the device. An obvious use case is high-precision agriculture, where various AMRs must travel down the same narrow path over the course of many months to plant, irrigate, and harvest crops, referencing exactly the same spot on every pass.

This requires a different set of navigational capabilities, starting with Global Navigation Satellite Systems (GNSS), on which the rest of the sensor and software ecosystem builds. GNSS is augmented by correction services such as RTK and SSR, which can improve precision roughly 100x over GNSS alone in open-sky applications, and by Inertial Measurement Units (IMUs) combined with sensor fusion software, which keep the AMR navigating where GNSS is not available (dead reckoning).

Before we dive into these technologies, let us spend a minute looking at use cases where both relative and absolute locations are required for an AMR to do its job.

Robotics applications requiring relative and absolute positioning

AMRs reveal what humans take for granted — the innate ability to accurately locate oneself in the world and take precise action based on that information. The more varied the applications for AMRs, the more we discover what types of actions require extreme precision. Some examples include:

- Agricultural Automation: In agriculture, AMRs are becoming increasingly common for tasks like planting, harvesting, and crop monitoring. These robots utilize absolute positioning, typically through GPS, to navigate large and often uneven fields with precision. This ensures that they can cover vast areas systematically and return to specific locations as needed. However, once in the proximity of crops or within a designated area, AMRs rely on relative positioning for tasks that demand a higher level of accuracy, such as picking fruit that may have grown or changed position since the AMR last visited it. By combining both positioning methods, these robots can operate efficiently in the challenging and variable environments typical of agricultural fields.

- Last-Mile Delivery in Urban Settings: AMRs are transforming last-mile delivery in urban environments by autonomously transporting goods from distribution centers to final destinations. These robots use absolute positioning to navigate city streets, alleys, and complex urban layouts, ensuring they follow optimized routes while avoiding traffic and adhering to delivery schedules. Upon reaching the vicinity of the delivery location, the AMRs will also use relative positioning to maneuver around variable or unexpected obstacles, such as a vehicle that is double parked on the street. This dual approach enables the AMRs to handle the intricacies of urban landscapes and make precise deliveries directly to customers’ doorsteps.

- Construction Site Automation: On construction sites, AMRs are employed to ensure the project is built to the exact specifications that were designated by the engineers. They also help with tasks like transportation of materials and mapping or surveying of environments. These sites often span large areas with constantly changing environments, requiring AMRs to use absolute positioning to navigate and maintain orientation within the overall project site. Relative positioning comes into play when AMRs perform tasks that require interaction with dynamic elements, such as avoiding other equipment or even personnel on the site. The combination of both positioning systems allows AMRs to effectively contribute to the complex and dynamic nature of construction projects, enhancing efficiency and safety.

- Autonomous Road Maintenance: AMRs are increasingly being used in road maintenance tasks such as pavement inspection, crack sealing, and line painting. These robots utilize absolute positioning to travel along stretches of highway or roadways, ensuring they stay on course over long distances and can precisely capture the specific locations where maintenance needs to occur. When performing these maintenance tasks, they switch to relative positioning to accurately identify and address specific road imperfections, paint lane markings with precision, or navigate around obstacles. This dual capability allows AMRs to efficiently manage road maintenance tasks while reducing the need for human workers to operate in hazardous roadside environments, improving safety and productivity.

- Environmental Monitoring and Conservation: In outdoor environments, AMRs are often deployed for environmental monitoring and conservation efforts such as wildlife tracking, pollution detection, and habitat mapping. These robots leverage absolute positioning to navigate vast natural areas, from forests to coastal regions, ensuring comprehensive coverage of the terrain and allowing for the capture of detailed site surveys and mapping. AMRs can perform tasks like capturing high-resolution images, collecting samples, or tracking animal movements with pinpoint accuracy and can overlay these samples over time in a cohesive way.

In all of the above examples, absolute positioning accuracy well below a meter is required to avoid potentially catastrophic consequences: without precise location, worker injuries, substantial product losses, and costly delays all become likely. In short, anywhere an AMR needs to operate within a few centimeters, it will need both relative and absolute location solutions.

Faction’s self-driving delivery cars rely on a complex array of sensors, including GNSS and Point One’s RTK network, to safely navigate their routes. | Credit: PointOne Nav

Technology for relative positioning

AMRs leverage a number of sensors to locate themselves in relation to other objects in their environment. These include:

- Cameras: Cameras function as the visual sensors of autonomous mobile robots, giving them an immediate picture of their surroundings much as human eyes do. These devices capture rich visual information that robots can use for object detection, obstacle avoidance, and environment mapping. However, cameras depend on adequate lighting and can be hampered by darkness and by adverse weather such as fog or rain. To address these limitations, cameras are often paired with near-infrared sensors or equipped with night vision capabilities, which allow robots to see in low-light conditions. Cameras are also a key component of visual odometry, a process that calculates changes in position over time by analyzing sequential camera images. In general, cameras require significant processing to recover 3-D structure from their 2-D images.

- Radar Sensors: Radar sensors emit radio waves that reflect off objects, providing information about each object's speed, distance, and relative position. The technology is robust and functions effectively in a variety of environmental conditions, including rain, fog, and dust, where cameras and lidar might struggle. However, radar sensors typically produce sparser, lower-resolution data than other sensor types. Even so, they are invaluable for reliably detecting the velocity of moving objects, which makes them particularly useful in dynamic environments where understanding how other entities move is critical.

- Lidar Sensors: Lidar (Light Detection and Ranging) is a sensor technology that measures distance by timing how long laser pulses take to reflect off objects and return. By scanning the environment with rapid laser pulses, lidar creates highly accurate, detailed 3D maps of the surroundings. This makes it an essential tool for simultaneous localization and mapping (SLAM), in which the robot builds a map of an unknown environment while keeping track of its own location within that map. Lidar is known for its precision and its ability to function well in varied lighting conditions, though it can be less effective in rain, snow, or fog, where water droplets scatter the laser beams. Despite its cost, lidar is favored in autonomous navigation for its accuracy and reliability in complex environments.

- Ultrasonic Sensors: Ultrasonic sensors function by emitting high-frequency sound waves that bounce off nearby objects, with the sensor measuring the time it takes for the echo to return. This allows the robot to calculate the distance to objects and obstacles in its path. These sensors are particularly useful for short-range detection and are often employed in slow, close-range activities such as navigating within tight spaces like warehouse aisles, or for precise maneuvers like docking or backing up. Ultrasonic sensors are cost-effective and work well in a variety of conditions, but their limited range and slower response time compared to lidar and cameras mean they are best suited for specific, controlled environments where high precision at close proximity is required.
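Lidar and ultrasonic sensors rely on the same time-of-flight principle: the pulse travels to the object and back, so the one-way distance is half of wave speed times round-trip time. Radar typically derives an object's velocity from the Doppler shift of the reflected signal. The sketch below illustrates both relations; the function names, the 77 GHz carrier, and the sample timings are illustrative assumptions, and it ignores real-world effects such as multipath, beam divergence, and the temperature dependence of the speed of sound.

```python
# Speed of light in a vacuum (m/s); close enough to its speed in air for this sketch.
SPEED_OF_LIGHT = 299_792_458.0
# Approximate speed of sound in dry air at 20 °C (m/s); it varies with temperature.
SPEED_OF_SOUND_20C = 343.0


def tof_distance(round_trip_s: float, wave_speed: float) -> float:
    """Distance to a target from a round-trip (echo) time.

    The pulse travels out and back, so the one-way distance is half of
    speed * time. Applies to lidar (light) and ultrasonic (sound) sensors.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return wave_speed * round_trip_s / 2.0


def doppler_radial_speed(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Radial speed of a target from a monostatic radar's Doppler shift.

    For a reflected signal the shift is f_d = 2 * v * f0 / c,
    so v = f_d * c / (2 * f0). Positive means the target is approaching.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)


if __name__ == "__main__":
    # Lidar: a 200 ns round trip corresponds to roughly 30 m.
    print(f"lidar: {tof_distance(200e-9, SPEED_OF_LIGHT):.2f} m")
    # Ultrasonic: a 5.83 ms echo corresponds to roughly 1 m.
    print(f"ultrasonic: {tof_distance(5.83e-3, SPEED_OF_SOUND_20C):.2f} m")
    # Radar with an assumed 77 GHz carrier: a 5.13 kHz shift is roughly 10 m/s.
    print(f"radar: {doppler_radial_speed(5.13e3, 77e9):.2f} m/s")
```

The roughly million-fold gap between the speed of light and the speed of sound is one reason lidar can update far faster than ultrasonic sensors, which matches their use for fast scanning versus slow, close-range maneuvers.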
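Visual odometry estimates how far the robot moved between consecutive camera frames, and the robot's position is then the running composition of those increments. A minimal planar sketch, assuming a hypothetical front end has already produced per-frame increments `(dx, dy, dtheta)` in the robot's own frame (feature matching and motion estimation are out of scope here); `Pose2D`, `compose`, and `integrate` are illustrative names:

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Planar pose: position (x, y) in metres and heading theta in radians."""
    x: float
    y: float
    theta: float


def compose(pose: Pose2D, dx: float, dy: float, dtheta: float) -> Pose2D:
    """Apply a motion increment expressed in the robot's own (body) frame.

    The increment is rotated into the world frame by the current heading
    before being added; the new heading is wrapped to (-pi, pi].
    """
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    heading = pose.theta + dtheta
    theta = math.atan2(math.sin(heading), math.cos(heading))
    return Pose2D(pose.x + c * dx - s * dy, pose.y + s * dx + c * dy, theta)


def integrate(increments, start: Pose2D = Pose2D(0.0, 0.0, 0.0)):
    """Chain per-frame increments into a trajectory (list of poses)."""
    pose = start
    trajectory = [pose]
    for dx, dy, dtheta in increments:
        pose = compose(pose, dx, dy, dtheta)
        trajectory.append(pose)
    return trajectory


if __name__ == "__main__":
    # Drive 1 m forward then turn 90 degrees left, four times: a 1 m square.
    square = integrate([(1.0, 0.0, math.pi / 2)] * 4)
    for p in square:
        print(f"x={p.x:+.3f} y={p.y:+.3f} theta={p.theta:+.3f}")
```

Because each increment carries some error and nothing corrects the running total, a composed pose like this drifts over time, which is one reason relative positioning alone is not enough for repeatable, absolute navigation.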

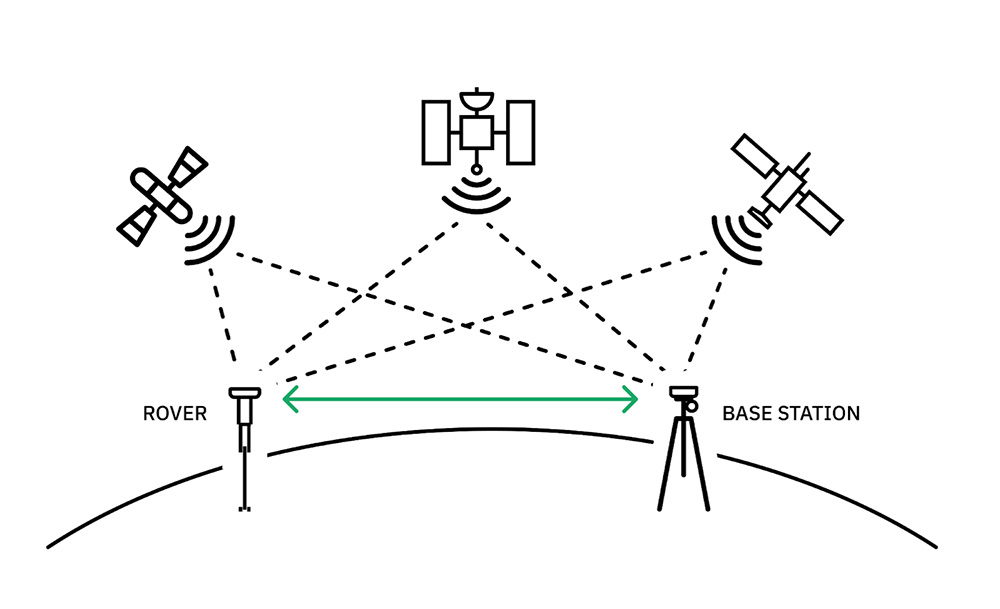

RTK relies on known base stations with fixed positions to correct any errors in GNSS receiver positioning estimates. | Credit: PointOne Nav

Technology for absolute positioning

The baseline technology for absolute positioning is GNSS, the umbrella term covering GPS and other satellite systems such as GLONASS, Galileo, and BeiDou. Because GNSS signals are affected by atmospheric conditions and by satellite clock and orbit errors, a standalone receiver's position solution can be off by several meters. That is not good enough for AMRs that require precise navigation, which is why GNSS corrections emerged: they narrow this error to as little as one centimeter.

- RTK: Real-time kinematic (RTK) positioning uses a network of base stations with known positions as reference points for correcting GNSS receiver location estimates. As long as the AMR is within 50 kilometers of a base station and has a reliable communication link, RTK can consistently provide 1–2 centimeter accuracy.

- SSR or PPP-RTK: State Space Representation (SSR), sometimes also called PPP-RTK, draws on data from the base station network, but instead of sending corrections directly from a local base station, it models the errors across a wide geographical area. The result is broader coverage, extending far beyond 50 km from a base station, at the cost of accuracy, which drops to 3–10 centimeters or worse depending on the density and quality of the network.
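The 50-kilometer rule of thumb for RTK suggests a simple selection policy: use RTK from the nearest base station when one is within range, and otherwise fall back to SSR's wide-area model. The sketch below illustrates that policy only. In practice the corrections service and receiver handle this; the station names and coordinates are invented, `choose_corrections` is a hypothetical helper, and the great-circle (haversine) distance ignores altitude.

```python
import math
from typing import List, NamedTuple, Optional, Tuple

EARTH_RADIUS_M = 6_371_000.0   # mean Earth radius
RTK_MAX_BASELINE_M = 50_000.0  # 50 km rule of thumb for RTK from the text


class BaseStation(NamedTuple):
    name: str  # hypothetical identifier
    lat_deg: float
    lon_deg: float


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    # Clamp guards against floating-point values slightly above 1.
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


def choose_corrections(
    lat: float, lon: float, stations: List[BaseStation]
) -> Tuple[str, Optional[BaseStation], float]:
    """Return (mode, nearest_station, distance_m), where mode is "RTK" or "SSR"."""
    if not stations:
        return "SSR", None, math.inf
    nearest = min(stations, key=lambda s: haversine_m(lat, lon, s.lat_deg, s.lon_deg))
    dist = haversine_m(lat, lon, nearest.lat_deg, nearest.lon_deg)
    # RTK (1-2 cm) needs a nearby base; beyond ~50 km, fall back to SSR (3-10 cm).
    mode = "RTK" if dist <= RTK_MAX_BASELINE_M else "SSR"
    return mode, nearest, dist


if __name__ == "__main__":
    # Invented stations and robot position, for illustration only.
    stations = [BaseStation("BASE_A", 37.30, -122.00), BaseStation("BASE_B", 38.00, -122.00)]
    mode, station, dist = choose_corrections(37.00, -122.00, stations)
    print(mode, station.name, f"{dist / 1000:.1f} km")
```

A denser network raises the chance that `choose_corrections` lands in the RTK branch, which is the practical meaning of network density for an AMR.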

While these two approaches work exceptionally well wherever GNSS signals are available (generally under open sky), many AMRs travel into places where something obstructs the view between the AMR's GNSS receiver and the sky, such as tunnels, parking garages, orchards, and urban environments. This is where Inertial Navigation Systems (INS), with their Inertial Measurement Units (IMUs) and sensor fusion software, come into play.

- IMU – An IMU combines accelerometers, gyroscopes, and sometimes magnetometers to measure a system’s linear acceleration, angular velocity, and magnetic field strength, respectively. These measurements enable an INS to determine the position, velocity, and orientation of an object relative to a starting point in real time.

The history of the IMU dates back to the early 20th century, with its roots in the development of gyroscopic devices used in navigation systems for ships and aircraft. The first practical IMUs were developed during World War II, primarily for use in missile guidance systems and later in the space program. The Apollo missions, for example, relied heavily on IMUs for navigation in space, where traditional navigation methods were not feasible. Over the decades, IMU technology has advanced significantly, driven by the miniaturization of electronic components and the advent of Micro-Electro-Mechanical Systems (MEMS) technology in the late 20th century. This evolution has led to more compact, affordable, and accurate IMUs, enabling their integration into a wide range of consumer electronics, automotive systems, and industrial applications today.

- Sensor Fusion – Sensor fusion software combines data from the IMU and other sensors to create a cohesive and accurate estimate of an AMR’s absolute location when GNSS is not available. The most basic implementations “fill in the gaps” in real time, between the moment the GNSS signal drops and the moment the AMR reacquires it. The accuracy of sensor fusion depends on several factors, including the quality and calibration of the sensors involved, the fusion algorithms used, and the specific application or environment in which it is deployed. More sophisticated sensor fusion software can cross-correlate different sensor modalities, yielding better positional accuracy than any one of the sensors could achieve on its own.
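One common way to implement the basic “fill in the gaps” behavior is a Kalman filter: IMU acceleration propagates the state between fixes (dead reckoning), and each GNSS position fix, when available, pulls the estimate back toward the measured location. The sketch below is a deliberately simplified 1-D linear Kalman filter, not Point One's algorithm or any product's; `Fusion1D` and `fuse` are hypothetical names, and the noise values (`accel_var`, `gnss_var`) are assumed for illustration. A production INS also estimates orientation and sensor biases in three dimensions.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass
class Fusion1D:
    """Hypothetical 1-D Kalman filter with state: position p (m), velocity v (m/s).

    IMU acceleration drives the prediction step; GNSS position fixes,
    when available, drive the update step.
    """
    p: float = 0.0
    v: float = 0.0
    # Symmetric 2x2 covariance [[ppp, ppv], [ppv, pvv]] stored as three terms.
    ppp: float = 1.0
    ppv: float = 0.0
    pvv: float = 1.0
    accel_var: float = 0.05 ** 2  # assumed accelerometer noise variance, (m/s^2)^2
    gnss_var: float = 0.02 ** 2   # assumed RTK-grade position noise variance, m^2

    def predict(self, accel: float, dt: float) -> None:
        """Dead reckoning: integrate IMU acceleration and grow the uncertainty."""
        self.p += self.v * dt + 0.5 * accel * dt * dt
        self.v += accel * dt
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]] and white acceleration noise.
        ppp = self.ppp + 2.0 * dt * self.ppv + dt * dt * self.pvv
        ppv = self.ppv + dt * self.pvv
        q = self.accel_var
        self.ppp = ppp + q * dt ** 4 / 4.0
        self.ppv = ppv + q * dt ** 3 / 2.0
        self.pvv = self.pvv + q * dt ** 2

    def update(self, z: float) -> None:
        """Correct with a GNSS position fix z (measurement matrix H = [1, 0])."""
        s = self.ppp + self.gnss_var         # innovation variance
        kp, kv = self.ppp / s, self.ppv / s  # Kalman gain
        r = z - self.p                       # innovation: measured minus predicted
        self.p += kp * r
        self.v += kv * r
        # P <- (I - K H) P
        ppp, ppv, pvv = self.ppp, self.ppv, self.pvv
        self.ppp = (1.0 - kp) * ppp
        self.ppv = (1.0 - kp) * ppv
        self.pvv = pvv - kv * ppv


def fuse(samples: Iterable[Tuple[float, Optional[float]]], dt: float) -> List[float]:
    """Run the filter over (imu_accel, gnss_fix_or_None) samples; return positions."""
    f = Fusion1D()
    estimates = []
    for accel, gnss in samples:
        f.predict(accel, dt)
        if gnss is not None:   # GNSS available: correct accumulated drift
            f.update(gnss)
        estimates.append(f.p)  # during an outage this is pure dead reckoning
    return estimates


if __name__ == "__main__":
    dt = 0.1
    # Robot moving at a steady 1 m/s; GNSS drops out after 5 s (e.g. entering a tunnel).
    samples = [(0.0, k * dt if k <= 50 else None) for k in range(1, 61)]
    est = fuse(samples, dt)
    print(f"last fix: {est[49]:.3f} m, after 1 s outage: {est[-1]:.3f} m (truth 6.000 m)")
```

While fixes arrive, the filter also learns the velocity, so during the outage the prediction step carries the robot forward instead of freezing at the last fix.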
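Why an INS cannot rely on inertial data alone indefinitely follows from double integration. Assuming, for illustration, a constant uncorrected accelerometer bias b, the velocity error grows linearly with time and the position error quadratically:

```latex
\delta a(t) = b
\quad\Rightarrow\quad
\delta v(t) = \int_0^t b \, d\tau = b\,t
\quad\Rightarrow\quad
\delta p(t) = \int_0^t b\,\tau \, d\tau = \tfrac{1}{2}\, b\, t^{2}
```

With an assumed bias of b = 0.01 m/s² (about 1 mg), the position error is 0.5 m after 10 s but 18 m after 60 s. This simple model ignores gyroscope errors, which in a real INS add further drift, and it shows why sensor fusion depends on GNSS fixes to reset the error once the signal returns.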

Choosing the best RTK network for your autonomous robots

Point One’s Polaris RTK network features more than 1,700 base stations across the world, providing one of the most reliable sources of positioning corrections. | Credit: PointOne Nav

RTK for GNSS provides a highly accurate source of absolute location for autonomous robots, and without it, many robotics applications are simply not possible or practical. From construction survey rovers to autonomous delivery drones and autonomous agriculture tools, numerous AMRs depend on the centimeter-accurate absolute positioning that only RTK can provide.

That said, an RTK solution is only as good as the network behind it. Consistently reliable corrections require a dense network of base stations so that receivers are always close enough to one for accurate error correction. The larger the network, the easier it is for AMRs to get corrections wherever they operate. Density is not the only factor, however: networks are highly complicated real-time systems that require professional monitoring, surveying, and integrity checking to ensure that the data sent to the AMR is accurate and reliable.

What does all of this mean for the developers of autonomous robots? At least where outdoor applications are concerned, no AMR is complete without an RTK-powered GNSS receiver. For the most accurate solution possible, developers should rely on the densest and most reliable RTK network. And where robots must move frequently in and out of ideal GNSS signal environments, such as for a self-driving delivery vehicle, RTK combined with an IMU provides the most comprehensive source of absolute positioning available.

No two autonomous robotics applications are the same, and each unique setup requires its own mix of relative and absolute positioning information. For the outdoor AMRs of tomorrow, however, GNSS with a robust RTK corrections network is an essential component of the sensor stack.

Aaron Nathan is the founder and CEO of Point One Navigation, an entrepreneur and technical leader with over a decade of experience in cutting-edge robotics and critical software and hardware development. He has founded two venture-backed startups and has deep domain experience in sensor fusion, computer vision, navigation, and embedded systems, specifically in the context of self-driving vehicles and other robotic applications. Point One Navigation offers the first centimeter-accurate positioning platform designed for today’s most demanding applications, including the complex task of ensuring safe and effective AMRs. Point One’s Atlas INS provides real-time, precise positioning for a variety of autonomous robotics applications, using its best-in-class sensor-fusion algorithms.