Samsung is rumored to tape out its next-generation HBM4 memory in Q4 2024, with mass production expected by the end of 2025.

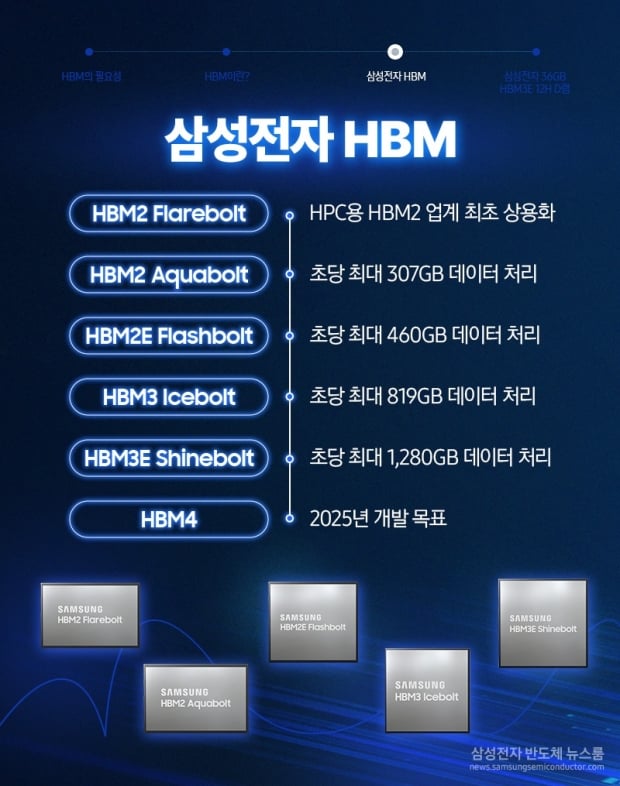

The AI industry is fueling the growth of HBM memory, with HBM makers SK hynix, Samsung, and Micron pumping out as much as they can, and it still hasn’t been enough. SK hynix is sold out of all of its HBM3 and HBM3E for both 2024 and 2025, and with HBM4 on the horizon, the race is on to see who can be the biggest HBM4 supplier to AI GPU companies like NVIDIA and AMD.

NVIDIA’s next-generation Rubin R100 AI GPU will use HBM4 memory, so SK hynix and Samsung are both doing everything they can (spending many tens of billions of dollars, setting up and expanding semiconductor fabs in South Korea, Taiwan, and the United States) to pump out more HBM memory (HBM3, HBM3E, HBM4, and even HBM4E) for 2025 and beyond.

The Elec reports that Samsung has started preliminary work on HBM4, and that it’s expected to tape out in Q4 2024. Tape-out means the product (so, HBM4) has its design and production methodologies finalized. Sampling of Samsung’s new HBM4 memory is expected to begin soon, with NVIDIA and AMD (especially NVIDIA) rubbing their hands together in (AI) glee.

We’ve previously reported that Samsung will be manufacturing its logic dies for next-gen HBM4 memory using its in-house 4nm process node. The logic die sits at the base of the stack of dies and is one of the core components of an HBM chip used on AI GPUs.

SK hynix, Samsung, and Micron all make HBM, with logic dies used on the latest HBM3E memory, but the HBM4 memory of the future requires a foundry process that’s ready with the customized functions required by AI chip makers like NVIDIA, AMD, Intel, and more.

The new 4nm process node is Samsung’s signature chip foundry manufacturing process, with yields of over 70%. Samsung uses the 4nm process for its new Exynos 2400 chipset, which powers its new Galaxy S24 family of AI smartphones.

Samsung has been using its 7nm process node since 2019, and the company had been expected to use 7nm or 8nm foundry processes to produce its HBM4 logic dies, but all signs now point to the newer 4nm process node. SK hynix has been dominating the HBM memory business alongside NVIDIA over the last couple of years, and has entered a huge partnership with TSMC to produce next-generation HBM4 memory.

NVIDIA’s next-generation Blackwell B100 and B200 AI GPUs will use the newer HBM3E memory standard, but the company has already revealed its future-gen Rubin R100 AI GPU, which will use ultra-fast HBM4 memory, with Rubin R100 AI GPUs set to drop in Q4 2025.